This blog is the second of a four-part series that examines the basics of AI and its applications from a non-technical perspective.

I. Navigating the Currents of the AI Revolution: A brief look at how we got here and what we can expect from AI

II. AI Basics: The Building Blocks of AI

III. AI Applications in Pharma: A look at how AI is being used in the pharmaceutical industry, from drug discovery to sales & marketing

IV. AI Applications in Marketing Research: A brief overview of how AI is influencing the way researchers design and execute studies and analyze data in marketing research

In my previous blog, I started a discussion on AI by introducing two core principles that underpin artificial intelligence: machine learning (ML) and deep learning (DL). These methodologies enable AI systems to interpret data, learn from it, and then make informed decisions or predictions. In this blog, I go deeper into the mechanisms by which AI learns, makes predictions, and interacts with our world.

Part II: The Building Blocks of AI

With the explosive spread of ChatGPT, artificial intelligence (AI) has captured the imagination of people everywhere. AI has been part of many applications for years, but ChatGPT brought it into the spotlight, and now everyone is talking about it. But what is AI? How does it learn? How can a computer read and write naturally? How can it recognize images and understand music? These are some of the questions I examine in this blog.

AI: A Blend of CPU, Operating System, and Application Software

Discussions of artificial intelligence inevitably invite comparisons between humans and computers, and rightfully so. At its core, AI can be likened to a computer or machine capable of thinking and learning independently, much as humans do. There is, however, a crucial distinction from a conventional computer: AI isn’t merely programmed to follow specific instructions for each task; rather, it learns and improves from data and experience over time. But what exactly does this mean?

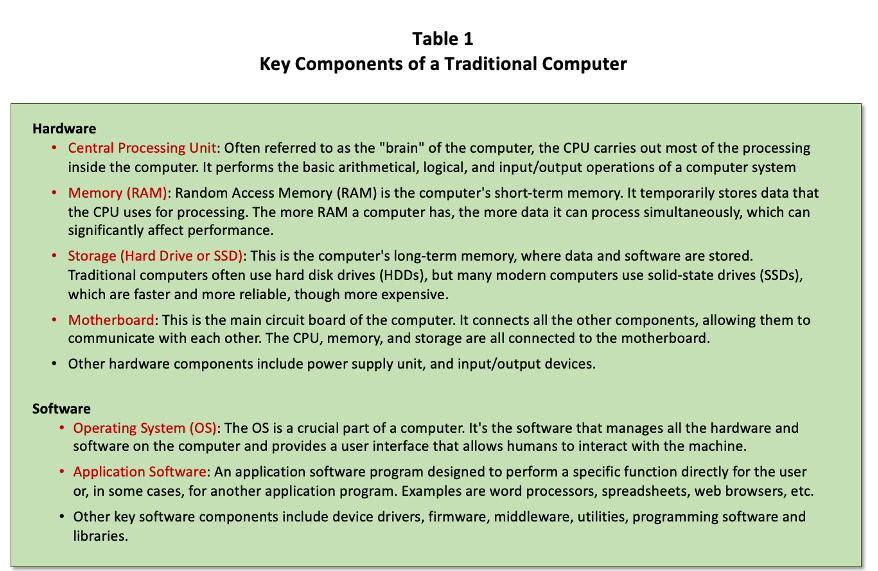

To better understand this, let’s start by comparing AI with traditional computers. Over time, computers have evolved from massive, room-filling machines to the compact devices we use today. They consist of two primary elements: hardware and software. Their components, including the central processing unit (CPU), memory, storage, motherboard, operating system, and application software, work together to process information and carry out tasks.

Traditional computers operate as rule-based systems, strictly adhering to a set of instructions coded by programmers. If the output isn’t as intended, the underlying code must be modified, a process that can be both time-consuming and expensive. Traditional computers are bound by their initial programming and lack the ability to learn or adapt to new situations.

Initially, I thought AI must be some sort of operating system, application software, or even a CPU. However, AI doesn’t fit neatly into the analogy of any single component within a regular computer: it encompasses aspects of all three and goes beyond them in distinctive ways.

- CPU: The CPU executes instructions inside a regular computer. Similarly, AI processes data and performs operations. However, unlike a CPU that follows predefined instructions, AI systems, particularly those using machine learning, can learn from data and adapt their operations accordingly.

- Operating System: The OS manages resources and facilitates interactions between the hardware and software. Like an OS, AI manages and coordinates various tasks, but it goes further: it can make decisions, predict outcomes, and even generate new data, all of which lie beyond the scope of an OS.

- Application Software: Application software performs specific tasks based on user input and predefined algorithms. AI can be seen as an advanced form of application software that not only performs tasks but also improves its performance over time through learning. Unlike traditional software, which performs tasks in a rule-based manner, AI can handle ambiguous situations, learn from experience, and even exhibit creativity in tasks like image generation or text composition.

In essence, AI is like a dynamic, learning, and adaptive blend of the CPU, OS, and application software. It processes data (CPU), manages tasks (OS), performs specific functions (application software), and beyond that, it learns from experience and can handle ambiguity, which sets it apart from traditional computing components.

How AI Learns: Algorithms, Data and Trained Models

So how does AI learn? The simplest answer is that it uses algorithms and statistical models to identify patterns in data. An algorithm is a set of rules or a sequence of instructions that can be followed to solve a problem or achieve a specific goal. Learning algorithms work iteratively: they adjust the model’s parameters (the internal numbers that determine its output) to minimize the difference between what the model predicted and the actual outcome.
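For readers curious about the math, one common way to make “minimize the difference” precise is to measure the average squared error over the n training examples and repeatedly nudge the parameters in the direction that shrinks it, a technique called gradient descent. This is one illustrative choice among many, not the only way AI systems learn. In the sketch below, f(x_i; θ) is the model’s prediction for example i, y_i is the actual outcome, θ stands for the model’s parameters, and η (the learning rate) is an assumed small step size.

```latex
L(\theta) = \frac{1}{n} \sum_{i=1}^{n} \bigl( f(x_i; \theta) - y_i \bigr)^2,
\qquad
\theta \leftarrow \theta - \eta \, \frac{\partial L}{\partial \theta}
```

The first expression scores how wrong the model is on average; the second moves the parameters a small step “downhill” on that score. Repeating the second step many times is what makes the learning iterative.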

Using algorithms to learn patterns from data and make decisions or predictions based on those patterns is called machine learning (ML). This learning process resembles how we learn from our experiences and improve over time. AI learns through extensive exposure to data: generally, the more relevant, high-quality data a system is trained on, the better it becomes at its task. Once an algorithm is trained on data, it produces a trained model capable of making predictions or decisions based on the patterns it has learned.

For example, an algorithm might be used to predict the price of a house based on features such as its size, location, and number of rooms. The algorithm would be trained on a dataset of houses whose prices are known, learning patterns that relate each house’s features (e.g., square footage and number of rooms) to its price. Once trained, the resulting model could predict the price of a new, unseen house based on its features.
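To make the house-price example concrete, here is a minimal Python sketch that learns the relationship between a single feature (square footage) and price using ordinary least squares, a classic statistical fitting method. The four houses and their prices are invented for illustration, and a real model would use many more examples and features such as location and number of rooms. The function names `train` and `predict` are hypothetical, not taken from any particular library.

```python
# Hypothetical training data: (square feet, sale price in dollars).
# These numbers are made up for illustration.
training_data = [
    (1000, 200_000),
    (1500, 270_000),
    (2000, 340_000),
    (2500, 410_000),
]


def train(data):
    """Fit price = slope * sqft + intercept by ordinary least squares."""
    n = len(data)
    mean_x = sum(x for x, _ in data) / n
    mean_y = sum(y for _, y in data) / n
    # How strongly price moves together with size, relative to how much size varies.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in data)
    var = sum((x - mean_x) ** 2 for x, _ in data)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept  # the "trained model" is just these two learned numbers


def predict(model, sqft):
    """Use the learned pattern to estimate the price of an unseen house."""
    slope, intercept = model
    return slope * sqft + intercept


model = train(training_data)
print(round(predict(model, 1800)))  # prints 312000
```

Because the made-up prices rise by exactly $140 per extra square foot, the fitted model recovers that slope and predicts $312,000 for an unseen 1,800-square-foot house. Real data is noisier, so real predictions would only be approximate.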

Handling Complex Problems with Deep Learning

The previous example is straightforward, but what happens when the data is very large and complex? Suppose we needed to translate text from one language to another, such as from English to Chinese. This is a complex task because languages are highly nuanced and context-dependent. Translating word for word often fails to convey the original meaning because structure, idioms, and grammar rules can vary greatly between languages.

For complex problems like this, a more sophisticated approach called deep learning (DL) is needed. DL is a subset of machine learning; the key difference lies in how data is processed. DL employs artificial neural networks with multiple layers to learn complex patterns in large amounts of data, loosely mimicking the way the human brain processes information.

Just as our brain comprises billions of interconnected neurons, an artificial neural network consists of layers of artificial neurons. Each neuron receives input, processes it (typically by computing a weighted sum of its inputs and applying a simple mathematical function), and passes its output to the next layer. The final layer generates the output, such as a classification or prediction.
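The layer-by-layer flow of a neural network can be sketched in a few lines of Python. Each hypothetical `neuron` computes a weighted sum of its inputs plus a bias and squashes the result with a sigmoid function (one common choice of activation among several). The weights below are hand-picked placeholders; in a real network they would be learned during training.

```python
import math


def neuron(inputs, weights, bias):
    """One artificial neuron: weighted sum of inputs plus bias, passed through a sigmoid."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1 / (1 + math.exp(-total))


def layer(inputs, layer_weights, layer_biases):
    """A layer is a group of neurons that all receive the same inputs."""
    return [neuron(inputs, w, b) for w, b in zip(layer_weights, layer_biases)]


def forward(inputs, network):
    """Pass the input through each layer in turn; the last layer's output is the prediction."""
    activations = inputs
    for layer_weights, layer_biases in network:
        activations = layer(activations, layer_weights, layer_biases)
    return activations


# Hypothetical weights: 2 inputs -> hidden layer of 3 neurons -> 1 output neuron.
network = [
    ([[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]], [0.0, 0.1, -0.1]),
    ([[1.0, -1.0, 0.5]], [0.2]),
]

output = forward([1.0, 2.0], network)
print(output)  # a single value between 0 and 1
```

Training, in this picture, means adjusting the numbers in `network` so that the final output matches known answers more closely.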

DL excels at recognizing patterns in data, whether it’s identifying edges, shapes, and textures in images or understanding sentiment in text. These pattern recognition and decision-making capabilities make DL a powerful tool in AI.
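One way to see how a network might pick out an edge is a convolution, the core operation of the convolutional neural networks (CNNs) mentioned later in this post. This minimal Python sketch slides a small 3x3 filter over a tiny made-up image. The filter is hand-written for illustration, whereas a trained CNN learns its own filters from data.

```python
def convolve2d(image, kernel):
    """Slide the kernel over every position where it fully fits and sum the elementwise products.
    (CNN libraries typically implement this same sliding operation, technically a cross-correlation.)"""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    result = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = 0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        result.append(row)
    return result


# A tiny 5x5 grayscale "image": dark (0) on the left, bright (1) on the right.
image = [[0, 0, 1, 1, 1] for _ in range(5)]

# A hand-made vertical-edge detector: responds when brightness changes from left to right.
vertical_edge = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

for row in convolve2d(image, vertical_edge):
    print(row)  # prints [3, 3, 0] on each of three lines
```

The large values mark the positions where the filter straddles the boundary between the dark and bright columns, and the 0 marks a uniformly bright region with no edge.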

Data and the Process of Learning

As mentioned earlier, data is central to training an AI algorithm to create a well-performing model (i.e., a model that can accurately and consistently do what you want it to do). The data can be anything: text, numbers, images, or sounds. The training process essentially involves feeding the model data, adjusting the model’s parameters based on its predictions (for example, how far they are from the known answers), and repeating this process until the predictions stop improving or are accurate enough for the task.
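The feed-adjust-repeat cycle can be sketched as a short Python training loop. It uses gradient descent, one common way to adjust parameters, on a hypothetical dataset of house sizes (in thousands of square feet) and prices (in thousands of dollars). The learning rate, stopping tolerance, and epoch limit are assumed values chosen for this toy example.

```python
# Hypothetical data: house size (thousands of sq ft) -> price (thousands of dollars).
sizes = [1.0, 1.5, 2.0, 2.5]
prices = [200.0, 270.0, 340.0, 410.0]


def mean_squared_error(w, b):
    """Average squared gap between predictions (w * size + b) and actual prices."""
    return sum((w * x + b - y) ** 2 for x, y in zip(sizes, prices)) / len(sizes)


def train(learning_rate=0.1, max_epochs=10_000, tolerance=1e-9):
    """Repeatedly predict, measure the error, and nudge w and b to reduce it."""
    n = len(sizes)
    w, b = 0.0, 0.0  # start from an uninformed guess
    loss = mean_squared_error(w, b)
    for epoch in range(1, max_epochs + 1):
        # Gradients of the mean squared error with respect to w and b.
        errors = [w * x + b - y for x, y in zip(sizes, prices)]
        grad_w = 2 * sum(e * x for e, x in zip(errors, sizes)) / n
        grad_b = 2 * sum(errors) / n
        # Step each parameter slightly in the direction that lowers the error.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
        new_loss = mean_squared_error(w, b)
        # Stop once another pass no longer meaningfully improves the predictions.
        if loss - new_loss < tolerance:
            loss = new_loss
            break
        loss = new_loss
    return w, b, loss, epoch


w, b, loss, epochs = train()
print(f"w={w:.2f}, b={b:.2f}, final loss={loss:.2e}, epochs={epochs}")
```

Starting from zero for both parameters, the loop converges to roughly w = 140 and b = 60 (about $140,000 per thousand square feet plus a $60,000 base), because the toy data happens to lie on that line. The stopping rule mirrors the description of training: it ends once another pass no longer meaningfully reduces the error.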

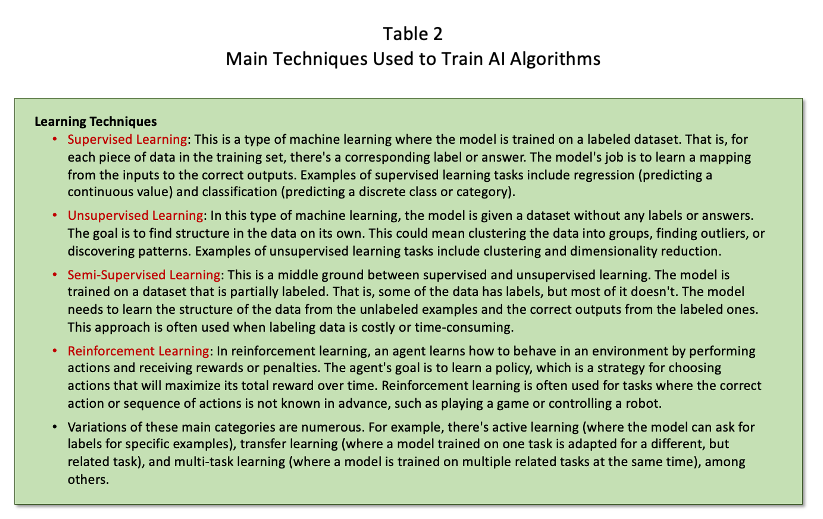

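The training process itself can take several forms depending on the kind of data and feedback available: supervised learning (learning from labeled examples), unsupervised learning (finding structure in unlabeled data), semi-supervised learning (combining a small amount of labeled data with a larger amount of unlabeled data), and reinforcement learning (learning through trial and error from rewards and penalties).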

As shown in Table 2, there are four main categories with many variations and subfields.
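Supervised learning is essentially the house-price example above: the training data comes with the answers (prices) attached. Unsupervised learning gets no answers and must find structure on its own. As an illustration only, here is a minimal Python sketch of k-means clustering, one classic unsupervised technique, grouping a made-up list of house sizes into two clusters without ever being told which houses belong together. The function name `kmeans_1d` and its starting rule are assumptions of this sketch.

```python
def kmeans_1d(values, k=2, iterations=10):
    """Group unlabeled numbers into k clusters with a minimal k-means loop."""
    # Deterministic start: pick k evenly spaced values from the sorted data as centers.
    step = max(1, len(values) // k)
    centers = sorted(values)[::step][:k]
    clusters = []
    for _ in range(iterations):
        # Assign each value to its nearest center.
        clusters = [[] for _ in centers]
        for v in values:
            nearest = min(range(len(centers)), key=lambda i: abs(v - centers[i]))
            clusters[nearest].append(v)
        # Move each center to the average of the values assigned to it.
        centers = [sum(c) / len(c) if c else centers[i] for i, c in enumerate(clusters)]
    return centers, clusters


# Hypothetical house sizes in square feet, with no prices or labels attached.
sizes = [850, 900, 950, 2400, 2500, 2600]
centers, clusters = kmeans_1d(sizes)
print(centers)   # [900.0, 2500.0]
print(clusters)  # [[850, 900, 950], [2400, 2500, 2600]]
```

The algorithm discovers a “smaller homes” group and a “larger homes” group purely from the numbers; deciding what those groups mean is left to a human.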

A word of caution about the data used to train a model. Because AI systems are very sensitive to their training data, it is critically important that high-quality data is used. If incorrect or biased data is used, or if the training is incomplete, the model’s predictions may also be incorrect or biased. This is a major concern because, for most users, the way an AI arrives at its predictions is a black box. Many AI models, particularly complex ones like deep neural networks, lack transparency or interpretability. These models can make very accurate predictions, but it’s often impossible for the average user to understand why the model made a particular prediction. This lack of transparency can make it hard to detect and correct bias in AI systems.
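A tiny Python experiment can illustrate the “biased data in, biased predictions out” problem. The same straight-line fit is trained twice on hypothetical data: once on accurate prices and once on prices that a faulty data source recorded 20% too high. Nothing in the algorithm flags the problem; it simply learns whatever pattern the data contains. The numbers and the 20% error are invented for this sketch.

```python
def fit_line(xs, ys):
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    slope = (sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
             / sum((x - mean_x) ** 2 for x in xs))
    return slope, mean_y - slope * mean_x


sizes = [1.0, 1.5, 2.0, 2.5]                 # thousands of sq ft (hypothetical)
true_prices = [200.0, 270.0, 340.0, 410.0]   # thousands of dollars (hypothetical)
# Suppose the data source systematically over-recorded every price by 20%.
biased_prices = [p * 1.2 for p in true_prices]

predictions = {}
for label, prices in [("clean", true_prices), ("biased", biased_prices)]:
    slope, intercept = fit_line(sizes, prices)
    predictions[label] = slope * 1.8 + intercept  # estimated price of a 1,800 sq ft house
    print(label, round(predictions[label], 1))   # clean 312.0, then biased 374.4
```

The biased model values the same house at about $374,400 instead of $312,000, and nothing in the output itself reveals which model was trained on flawed data.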

Interacting with the World: Communicating with Humans

One of the most incredible aspects of AI is its ability to interact with our world: read and understand text, see images, and hear sounds.

The main interface between AI models and humans is text. Natural Language Processing (NLP) is a critical methodology that enables computers to understand and generate human language. NLP models can process text input from users, understand its meaning and context, and generate human-like responses. They can also analyze text data from the web or other sources to gather information. These capabilities allow AI systems to interact with humans in a more natural and intuitive way, making technology more accessible and user-friendly.
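Computers work with numbers, so a first step in processing text is turning words into numbers. The Python sketch below shows about the simplest version of that idea, a “bag of words” that counts how often chosen vocabulary words appear. It is an illustration only: modern NLP systems such as those behind ChatGPT use learned subword tokenizers and numeric representations (embeddings) that capture far more meaning and context than raw counts, and the vocabulary here is made up.

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def bag_of_words(text, vocabulary):
    """Represent text as a list of counts, one per vocabulary word."""
    counts = Counter(tokenize(text))
    return [counts[word] for word in vocabulary]


vocabulary = ["great", "terrible", "service", "food"]  # hypothetical vocabulary
vector = bag_of_words("Great food, great service!", vocabulary)
print(vector)  # [2, 0, 1, 1]
```

Once a review is a list of numbers like this, a learning algorithm can be trained to associate patterns in those numbers with, for example, positive or negative sentiment.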

AI models can ‘hear’ sounds and recognize speech by processing audio data, for example with recurrent neural networks (RNNs) such as Long Short-Term Memory (LSTM) networks or Gated Recurrent Units (GRUs), which are good at handling sequential data. Similarly, AI models can ‘see’ the world by processing pixel data with convolutional neural networks (CNNs).
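What makes recurrent networks suited to sequences such as audio is that they keep a running “memory” (a hidden state) that is updated at each step. The Python sketch below is a plain single-unit RNN, not an LSTM or GRU (those add gating mechanisms on top of this basic idea), and its weights are hypothetical values rather than trained ones.

```python
import math


def run_rnn(sequence, w_input, w_hidden, bias=0.0):
    """Process a sequence one step at a time, carrying a hidden state forward."""
    hidden = 0.0
    states = []
    for x in sequence:
        # The new state blends the current input with the memory of earlier inputs.
        hidden = math.tanh(w_input * x + w_hidden * hidden + bias)
        states.append(hidden)
    return states


# Two sequences that end with identical inputs but start differently.
states_a = run_rnn([1.0, 0.0, 0.0], w_input=0.8, w_hidden=0.5)
states_b = run_rnn([0.0, 0.0, 0.0], w_input=0.8, w_hidden=0.5)
print(states_a[-1], states_b[-1])
```

Even though both sequences end with the same inputs, the first one’s final state is still above zero because the network remembers the early 1.0, while the second stays at 0.0. That carried-over memory is what lets recurrent models relate a sound to the sounds that came before it.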

In a complex AI system, these models might all be used together. For example, an AI assistant like Siri or Alexa might use NLP models to understand your commands, a CNN to interpret visual data if it has access to a camera, and an RNN such as an LSTM or GRU to process audio data for speech recognition and synthesis.

The beauty of these models is that they not only allow the AI system to interact with the outside world but also simplify how humans interact with the AI.

Conclusion

AI’s learning and operation are built upon a hierarchy of concepts. Algorithms form the base, allowing AI to learn and make decisions. Machine learning, a subset of AI, uses these algorithms to learn from data and make predictions. Deep learning, a further subset of machine learning, employs a specific type of algorithm called artificial neural networks, loosely inspired by the structure and function of the human brain, to learn complex patterns in large amounts of data. The specific training approach depends on the type, size, and complexity of the data being used. The objective is to create a trained model that has learned patterns from the data and can use these patterns to make predictions or decisions.

In the next part of this blog series, I will explore AI’s applications in the pharmaceutical industry, from drug discovery to sales and marketing. The journey of AI has only just begun, and its potential to positively impact humanity is limitless.

About the Author:

Sugata co-founded Cadence Communications & Research, a healthcare-focused agency offering medical communications and marketing research services, with Laura Smith in 2008. Sugata currently heads the market research group at the firm and has spearheaded key strategic initiatives, leveraging his deep technical expertise and industry insights to drive business growth. Prior to Cadence, he held consulting and market research roles at Andersen Consulting (now Accenture), The Wilkerson Group, Amgen, and ICI.

Sugata co-authored “Management Consulting: A Complete Guide to the Industry” with Daryl Twitchell (1st ed. 1999, 2nd ed. 2002, Wiley), and he regularly shares his expertise through speaking engagements on key topics such as healthcare trends, marketing research, and management consulting.

Sugata received his BA in Economics (with honors) from The University of Chicago, an MA in Economics from Utah State University, and an MBA from Yale University. Sugata may be contacted at sbiswas@cadenceresearch.com.

About Cadence Communications & Research:

Cadence Communications & Research is a boutique professional services firm serving the global healthcare industry. Founded in 2008, Cadence offers services in two key interrelated areas: medical communications and market research. Cadence is a certified woman-owned business and has been named to the Inc. 500/5000 list of the fastest-growing private companies in America three times. Cadence is a member of Diversity Alliance for Science. For more information, please visit: www.cadencecr.com.

#ArtificialIntelligence #AIExplained #AINonTechnical #AIinPharma #AIinMarketing #AIApplications #AIinMarketResearch #ChatGPT #AIRevolution #diversityallianceforscience #marketresearch